Decision trees are a popular family of algorithms used for predictive modelling in machine learning.

Classification and Regression Trees (CART) refers to decision tree algorithms that can be used for classification or regression tasks.

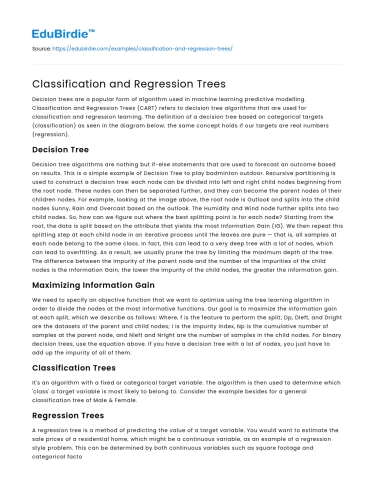

Decision trees are described here for categorical targets (classification), as seen in the diagram below; the same concept holds if the targets are real numbers (regression).

Decision Tree

Decision tree algorithms are essentially a series of if-else statements that are used to predict an outcome from the values of the input features.

A simple example is a decision tree for deciding whether to play badminton outdoors.

Recursive partitioning is used to construct a decision tree: starting from the root node, each node can be divided into left and right child nodes. These nodes can then be split further, becoming the parent nodes of their own child nodes.

For example, looking at the image above, the root node is Outlook, which splits into the child nodes Sunny, Rain and Overcast based on the outlook. The Humidity and Wind nodes each split further into two child nodes.
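The tree described above can be written directly as nested if-else statements. The sketch below is illustrative only: the function name `play_badminton`, the attribute values (`"high"`, `"strong"` and so on), the placement of Humidity under Sunny and Wind under Rain, and the leaf decisions are all assumptions, since the text names only the Outlook, Humidity and Wind nodes.

```python
def play_badminton(outlook: str, humidity: str, wind: str) -> bool:
    """Walk a hand-written decision tree from the root (Outlook) to a leaf.

    The branch layout and leaf values are assumed for illustration.
    """
    if outlook == "overcast":
        # Assumed leaf: overcast days are always fine for playing.
        return True
    if outlook == "sunny":
        # Assumed child node: Humidity decides on sunny days.
        return humidity == "normal"
    if outlook == "rain":
        # Assumed child node: Wind decides on rainy days.
        return wind == "weak"
    raise ValueError(f"unknown outlook: {outlook!r}")


print(play_badminton("sunny", "high", "weak"))
print(play_badminton("rain", "high", "weak"))
```

Each call follows exactly one path from the root to a leaf, which is why a prediction needs only a handful of comparisons.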

So, how can we figure out where the best splitting point is for each node?

Starting from the root, the data is split on the attribute that yields the most Information Gain (IG). We then repeat this splitting step at each child node until the leaves are pure, that is, until all samples at each node belong to the same class.

In practice, this can produce a very deep tree with many nodes, which can lead to overfitting. As a result, we usually prune the tree by limiting its maximum depth.
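The procedure described above (pick the most informative split, recurse on each child, stop at pure leaves or at a maximum depth) can be sketched in plain Python. This is a minimal illustration, not a reference CART implementation: it assumes numeric features, binary splits of the form `value <= threshold`, and Gini impurity as the impurity measure. The names `gini`, `best_split`, `build_tree` and `predict`, and the toy data, are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels):
    """Return (gain, feature_index, threshold) for the best split, or None."""
    parent = gini(labels)
    n = len(labels)
    best = None
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue  # a split must send samples to both children
            gain = parent - len(left) / n * gini(left) - len(right) / n * gini(right)
            if best is None or gain > best[0]:
                best = (gain, f, t)
    return best


def build_tree(rows, labels, depth=0, max_depth=3):
    """Recursively partition the data; a leaf stores the majority class."""
    majority = Counter(labels).most_common(1)[0][0]
    if len(set(labels)) == 1 or depth >= max_depth:
        return majority  # pure node, or depth limit reached
    split = best_split(rows, labels)
    if split is None:
        return majority  # no feature separates the remaining samples
    _, f, t = split
    left = [(r, y) for r, y in zip(rows, labels) if r[f] <= t]
    right = [(r, y) for r, y in zip(rows, labels) if r[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([r for r, _ in left], [y for _, y in left], depth + 1, max_depth),
        "right": build_tree([r for r, _ in right], [y for _, y in right], depth + 1, max_depth),
    }


def predict(tree, row):
    """Follow the splits until a leaf (a plain class label) is reached."""
    while isinstance(tree, dict):
        branch = "left" if row[tree["feature"]] <= tree["threshold"] else "right"
        tree = tree[branch]
    return tree


# Toy data (made up): each row is (age, income); the label is a purchase decision.
rows = [(22, 20), (25, 35), (31, 30), (35, 60), (45, 80), (52, 40)]
labels = ["no", "no", "yes", "yes", "yes", "yes"]
tree = build_tree(rows, labels, max_depth=3)
print(tree)
print(predict(tree, (28, 50)))
```

Lowering `max_depth` is the simple form of pruning mentioned above: with `max_depth=0` the function returns a single leaf holding the majority class.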

The Information Gain is the difference between the impurity of the parent node and the weighted sum of the impurities of the child nodes; the lower the impurity of the child nodes, the greater the information gain.

Maximizing Information Gain

To split the nodes on the most informative features, we need to define an objective function that the tree learning algorithm optimizes. Our goal is to maximize the information gain at each split, which we define as follows:

$$IG(D_p, f) = I(D_p) - \frac{N_{left}}{N_p} I(D_{left}) - \frac{N_{right}}{N_p} I(D_{right})$$

Here, $f$ is the feature on which the split is performed; $D_p$, $D_{left}$, and $D_{right}$ are the datasets of the parent and child nodes; $I$ is the impurity measure; $N_p$ is the total number of samples at the parent node; and $N_{left}$ and $N_{right}$ are the numbers of samples in the child nodes.

The equation above applies to binary decision trees, where each node has two children. If a node is split into more than two child nodes, sum the weighted impurities of all of them.
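As a worked example with made-up numbers, suppose a parent node holds 80 samples, 40 from each of two classes, and a candidate split sends 30 and 10 samples of the two classes to the left child and 10 and 30 to the right child. Taking the impurity measure $I$ to be the Gini impurity (one minus the sum of the squared class proportions), which is an assumption for this example, the information gain of the split works out as:

$$
\begin{aligned}
I(D_p) &= 1 - (0.5^2 + 0.5^2) = 0.5 \\
I(D_{left}) &= 1 - (0.75^2 + 0.25^2) = 0.375 \\
I(D_{right}) &= 1 - (0.25^2 + 0.75^2) = 0.375 \\
IG &= I(D_p) - \frac{N_{left}}{N_p} I(D_{left}) - \frac{N_{right}}{N_p} I(D_{right}) \\
   &= 0.5 - \frac{40}{80}(0.375) - \frac{40}{80}(0.375) = 0.125
\end{aligned}
$$

A split that produced purer children would lower the two child terms and therefore raise the gain.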

Classification Trees

A classification tree is an algorithm whose target variable is fixed or categorical. The algorithm is used to determine which class a target variable most likely belongs to.

Consider, for example, a general classification tree that separates Male from Female.

Regression Trees

A regression tree is an algorithm that predicts the value of a continuous target variable. An example of a regression problem is estimating the sale price of a residential home, which is a continuous variable.

The price can depend both on continuous variables such as square footage and on categorical factors such as the type of residence, the area in which the property is located, and so on.

When to use Classification and Regression Trees

Classification trees are used when a dataset must be divided into the classes of a response variable. In most cases the classes are Yes and No; in other words, there are only two of them and they are mutually exclusive. When there are more than two classes, a variant of the classification tree algorithm is used.

Regression trees, on the other hand, are used when the response variable is continuous. A regression tree is used, for example, when the response variable is something like the price of a home or the temperature of the day.

In short, regression trees are used in problems that require predicting a numeric value, while classification trees are used in problems that require assigning a class.

How Classification and Regression Trees Work

A classification tree splits a dataset into categories based on the homogeneity of the data. For example, let's say there are two factors that influence whether or not a customer will purchase a certain phone: income and age.

If the training data shows that 95% of people over 30 bought a smartphone, the data is split at that point, and age becomes the tree's top node. The data is now “95% pure” as a result of this division. In classification trees, impurity measures such as entropy and the Gini index are used to quantify the homogeneity of the data.
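To put numbers on the “95% pure” node above, the two impurity measures can be computed from the class proportions. The sketch below assumes two classes (bought, did not buy) with proportions 0.95 and 0.05; the function names are illustrative.

```python
import math


def gini(proportions):
    """Gini index: 1 minus the sum of squared class proportions."""
    return 1.0 - sum(p * p for p in proportions)


def entropy(proportions):
    """Shannon entropy in bits; classes with zero proportion contribute nothing."""
    return -sum(p * math.log2(p) for p in proportions if p > 0)


node = [0.95, 0.05]  # 95% bought, 5% did not
print(f"Gini index: {gini(node):.3f}")    # about 0.095
print(f"Entropy: {entropy(node):.3f} bits")  # about 0.286
```

Both measures are 0 for a perfectly pure node and reach their maximum for a 50/50 node (0.5 for the Gini index, 1 bit for entropy), so the values here indicate a node that is close to pure.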

In a regression tree, a regression model is fitted to the target variable using each of the independent variables. The data for each independent variable is then split at several candidate split points.

At each candidate split point, the differences between the predicted and actual values are squared and summed to give the sum of squared errors (SSE). The SSE values are compared across the variables and split points, and the variable and point with the lowest SSE are chosen for the split. This process is then repeated recursively on the resulting subsets until a stopping criterion is met.
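The SSE-based search for a split point can be sketched as follows. This is a simplified illustration under stated assumptions: it handles a single numeric feature, predicts the mean of the target on each side of the split, and tries the midpoints between consecutive distinct feature values as candidates. The function names and the housing figures are made up.

```python
def sse(values):
    """Sum of squared errors around the mean, which is the leaf's prediction."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_sse_split(xs, ys):
    """Return (threshold, total_sse) for the candidate split with the lowest SSE."""
    points = sorted(set(xs))
    best = None
    # Candidate thresholds are midpoints between consecutive distinct values.
    for lo, hi in zip(points, points[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        total = sse(left) + sse(right)
        if best is None or total < best[1]:
            best = (t, total)
    return best


# Hypothetical data: square footage and sale price in thousands.
square_feet = [800, 950, 1100, 1800, 2000, 2200]
price = [150, 160, 170, 300, 310, 330]
threshold, total = best_sse_split(square_feet, price)
print(threshold, round(total, 2))
```

With several features, the same search would be run per feature and the feature and threshold with the lowest total SSE would be kept, which matches the comparison described above.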

Advantages of Classification and Regression Trees

The aim of any classification or regression tree analysis is to generate a series of if-else conditions that make for accurate prediction or classification.

Because they are built from a series of if-else conditions, classification and regression trees offer a number of benefits over other modelling techniques.

1. The Results are Simple

The results of a classification or regression tree are relatively simple to interpret. This simplicity helps in the following ways:

• It enables new observations to be classified quickly. This is because evaluating only one or two logical conditions is much easier than computing scores for each category using complex nonlinear equations.

• It can also lead to a simpler model that describes why the results are classified or predicted the way they are. For example, if-then statements make it much simpler to understand business issues than complex nonlinear equations.

2. They are Nonparametric & Nonlinear

Classification and regression tree approaches are well suited to data mining because they do not make any implicit assumptions about the form of the relationship between the predictors and the target.

Classification and regression trees can therefore reveal relationships between variables that would not have been feasible to find using other methods.

3. Classification and Regression Trees Implicitly Perform Feature Selection

Feature selection, also known as variable screening, is a crucial aspect of analytics. In a decision tree, the top few nodes on which the tree is split correspond to the most important variables in the dataset. As a consequence, feature selection is carried out automatically, and we don't have to perform it as a separate step.

Limitations of Classification and Regression Trees

There are cases in which a classification or regression tree does not yield the best results. Some of the drawbacks of classification and regression trees are listed below:

• Overfitting: An overfitted tree fits much of the noise in the training data and, as a result, produces inaccurate predictions on new data.

• High variance: A small change in the training data can produce a very different tree and therefore very different predictions, which makes the results unstable.

• Low bias: A very complex decision tree normally has low bias on the training data, which makes it hard for the model to generalize to new data.

Conclusion

The classification and regression tree (CART) algorithm is one of the most basic and oldest algorithms. It's a technique for predicting outcomes based on a set of predictor variables.

Classification and regression trees are well suited to data mining because they need little data pre-processing.

Compared with other predictive models, decision tree models are simple to understand and implement, which gives them a significant advantage.
